Model context protocol (MCP) refers to a structured approach or standard that governs how context is gathered, maintained and delivered to AI models, particularly large language models (LLMs), during inference. It defines the types of data (e.g., user intent, historical interactions, permissions) that can be injected into the model prompt or session to improve output relevance, personalization and safety.
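To make the idea of injectable context types more concrete, here is a minimal Python sketch. The `ContextItem` and `SessionContext` classes, the permission names and the sample data are all hypothetical. They show one way to keep permission-restricted context out of a model's prompt, not how any particular MCP implementation works.

```python
from dataclasses import dataclass, field


@dataclass
class ContextItem:
    """One piece of context, tagged with the permission needed to expose it (hypothetical schema)."""
    kind: str                          # e.g. "user_intent", "history", "account"
    content: str
    required_permission: str = "public"


@dataclass
class SessionContext:
    """Context gathered for one session, plus the permissions of the current user."""
    user_id: str
    permissions: set[str] = field(default_factory=lambda: {"public"})
    items: list[ContextItem] = field(default_factory=list)

    def visible_items(self) -> list[ContextItem]:
        """Return only the items this user is allowed to have injected into the prompt."""
        return [i for i in self.items if i.required_permission in self.permissions]


# Illustrative session: the "risk" note is gathered but never reaches the model.
ctx = SessionContext(
    user_id="u-123",
    permissions={"public", "billing"},
    items=[
        ContextItem("user_intent", "Dispute a duplicate charge"),
        ContextItem("history", "Contacted support last week about the same charge"),
        ContextItem("account", "Card ending 4242", required_permission="billing"),
        ContextItem("internal_note", "Flagged for review", required_permission="risk"),
    ],
)

for item in ctx.visible_items():
    print(f"{item.kind}: {item.content}")
```

Filtering at assembly time, before anything is written into the prompt, means the model never sees data the user is not entitled to, which is easier to audit than asking the model to withhold it.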

By establishing protocols for what contextual information should be included and how it’s formatted, organizations can ensure consistent, secure and interpretable AI responses across applications. This is especially important in enterprise environments where compliance, traceability and contextual accuracy are critical for AI effectiveness.
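Consistent formatting is easiest to enforce in code. The following sketch assumes a hypothetical, fixed set of context sections (`policy`, `user`, `history`, `task`) and renders them into a tagged, deterministic block; the tag style and section names are illustrative choices, not part of any standard.

```python
import json

# Hypothetical fixed section order, so every application renders context identically.
SECTION_ORDER = ("policy", "user", "history", "task")


def render_context(context: dict[str, object]) -> str:
    """Serialize context into a stable, tagged block for the model prompt.

    Unknown sections are rejected rather than silently passed through, and
    each value is JSON-encoded with sorted keys so the output is deterministic
    and can be logged verbatim for traceability.
    """
    unknown = set(context) - set(SECTION_ORDER)
    if unknown:
        raise ValueError(f"unexpected context sections: {sorted(unknown)}")
    lines = []
    for section in SECTION_ORDER:
        if section in context:
            body = json.dumps(context[section], sort_keys=True, ensure_ascii=False)
            lines.append(f"<{section}>{body}</{section}>")
    return "\n".join(lines)


block = render_context({
    "task": "Summarize the open ticket",
    "user": {"tier": "gold", "name": "Ana"},
    "policy": ["Do not reveal internal notes"],
})
print(block)
```

Because section order and key order are fixed, the same inputs always produce the same prompt text, which supports the consistency and traceability goals described above.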

“Agentic systems rely on real-time access to interaction history, behavioral signals and operational context. When data is fragmented across systems, the AI’s ability to respond intelligently is compromised. Clean, unified data creates the conditions for continuity, context and relevance.”

Rahul Garg, VP, Product, AI and Self-service, Genesys

Model context protocol for enterprise businesses

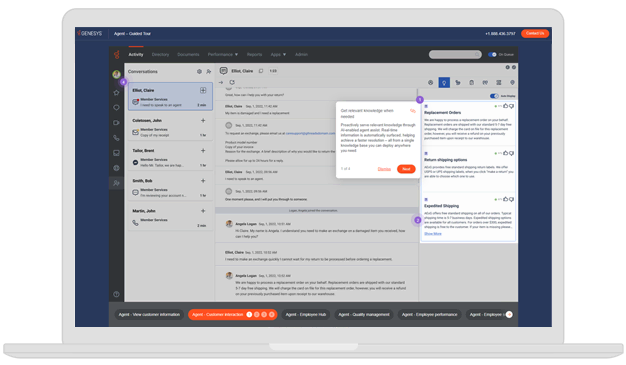

Model context protocol is a structured method for supplying AI systems with the data and context they need to deliver accurate, reliable outputs in real time. For enterprises, especially those using generative AI across departments or customer channels, MCP helps ensure that each AI interaction is informed by relevant business rules, user information, past interactions and operational policies. Instead of relying on static prompts or siloed data, MCP dynamically connects AI models to live enterprise systems such as CRM systems, knowledge bases and ticketing platforms. This helps the AI understand the “who,” “what” and “why” of each situation, allowing it to act intelligently, consistently and in line with compliance requirements.
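In the open MCP specification, clients and servers exchange JSON-RPC 2.0 messages, and an AI application fetches live data by asking a server to run a tool via the `tools/call` method. The sketch below is a simplified, in-process stand-in: it skips the transport and initialization handshake, and the `lookup_customer` tool and `FAKE_CRM` data are hypothetical placeholders for a real CRM integration.

```python
import json

# Stand-in for a live CRM; a real server would call the CRM's API here (hypothetical data).
FAKE_CRM = {"C-1001": {"name": "Ana Silva", "open_tickets": 2, "plan": "enterprise"}}


def lookup_customer(customer_id: str) -> dict:
    """Hypothetical tool that fetches a customer record from the CRM stub."""
    record = FAKE_CRM.get(customer_id)
    if record is None:
        raise KeyError(customer_id)
    return record


TOOLS = {"lookup_customer": lookup_customer}


def rpc_error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Minimal handler for a JSON-RPC 2.0 'tools/call' request (simplified sketch)."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return rpc_error(req_id, -32601, "Method not found")
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return rpc_error(req_id, -32602, "Unknown tool")
    try:
        data = tool(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(data)}], "isError": False}
    except KeyError as exc:
        # Tool failures are reported inside the result, not as protocol-level errors.
        result = {"content": [{"type": "text", "text": f"not found: {exc}"}], "isError": True}
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"customer_id": "C-1001"}},
})
print(handle(request))
```

The text returned in `content` is what the application would then place into the model's context, so the model answers from the current CRM record rather than from a static prompt.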

By standardizing how context is delivered to models, MCP can increase trust, help reduce hallucinations and support secure, enterprise-grade AI use at scale.
