Artificial intelligence (AI) compliance is the process of ensuring that AI systems adhere to legal, regulatory and ethical standards. These include data protection laws (such as the GDPR), industry-specific requirements and internal policies on fairness, transparency and accountability.

As AI becomes central to decision-making and customer engagement, organizations must demonstrate that their systems operate responsibly and within the bounds of governance frameworks. AI compliance not only reduces legal risks but also builds trust with customers and stakeholders, helping brands lead responsibly in the AI era.

AI compliance for enterprise contact centers

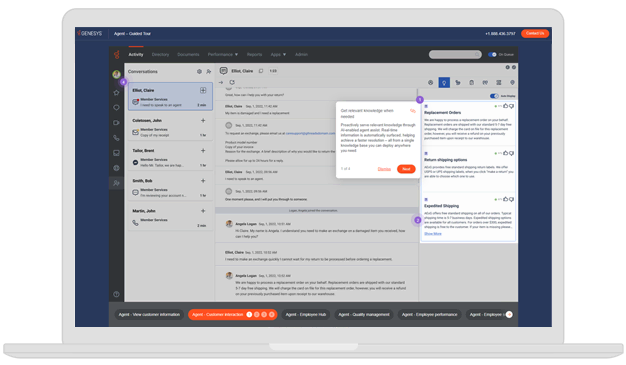

AI compliance in enterprise contact centers means making sure that AI tools follow rules that protect both customers and the company. These rules cover privacy, data security, fairness and transparency. Contact centers often handle sensitive information, such as credit card numbers or personal details, so it’s important that AI systems don’t misuse this data.
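One common way to keep sensitive data out of an AI tool is to redact it before the text ever reaches the model. The sketch below is illustrative only and is not a complete compliance control: it assumes card numbers appear as 13 to 19 digits, optionally separated by spaces or hyphens, and it uses the Luhn checksum to reduce false positives. The function names and the placeholder text are hypothetical.

```python
import re

# Assumption: a card number is 13-19 digits, optionally separated
# by single spaces or hyphens. Real transcripts may need more patterns.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_card_numbers(text: str, placeholder: str = "[REDACTED CARD]") -> str:
    """Replace likely card numbers in text with a placeholder."""

    def replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        # Only redact candidates that pass the checksum; leave other
        # long digit runs (order or ticket numbers) untouched.
        return placeholder if luhn_valid(digits) else match.group()

    return CARD_CANDIDATE.sub(replace, text)


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number.
    print(redact_card_numbers("My card is 4111 1111 1111 1111, thanks"))
```

In practice, a step like this would run on chat messages or call transcripts before they are passed to an AI assistant or written to logs. Other personal details (names, addresses, account numbers) would need their own detection rules.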

For example, if an AI tool is used to help agents respond to customers, it must follow laws that protect personal data, such as the GDPR (in the European Union) or the CCPA (in California). AI must also treat all customers fairly, without bias or discrimination. If the system suggests answers or takes actions, it should be clear how those decisions are made.

Companies also need to monitor their AI tools to make sure they keep working as expected and don’t cause harm. This includes regular testing and giving people a way to report problems. By following AI compliance rules, contact centers build trust with customers and avoid legal trouble.