A virtual agent reroutes a customer’s billing issue to a backend system, flags an anomaly for human review and proposes a refund before the customer even asks for one. This is a scenario that will soon become reality with agentic AI.

Artificial intelligence (AI) now operates on the front lines of business. It responds to nuance, adapts in real time and drives outcomes with less reliance on human input. These systems recognize goals and adjust their behavior to meet them, often across departments, touchpoints or even partner ecosystems.

We’re entering the agentic era with sophisticated technology capable of interpreting intent and coordinating actions toward defined objectives. And without clear governance, every AI-powered decision carries new risk.

Plenty of vendors are quick to label their products as “autonomous” AI. That’s a stretch. Today’s AI isn’t fully autonomous. It cannot independently devise strategies or navigate complex environments without human scaffolding.

Right now, we’re in a phase of AI advancement that’s semi-autonomous. It’s reliant on predefined contexts and continuous human oversight.

The industry’s casual conflation of “semi-autonomous” with “autonomous” is both imprecise and potentially misleading. Flashy launches often lack substance. Demos showcase power and speed but rarely address transparency, control or ethical guardrails. In a world where AI executes thousands of micro-decisions per minute, skipping over governance feels like removing the brakes from a car because you upgraded the engine.

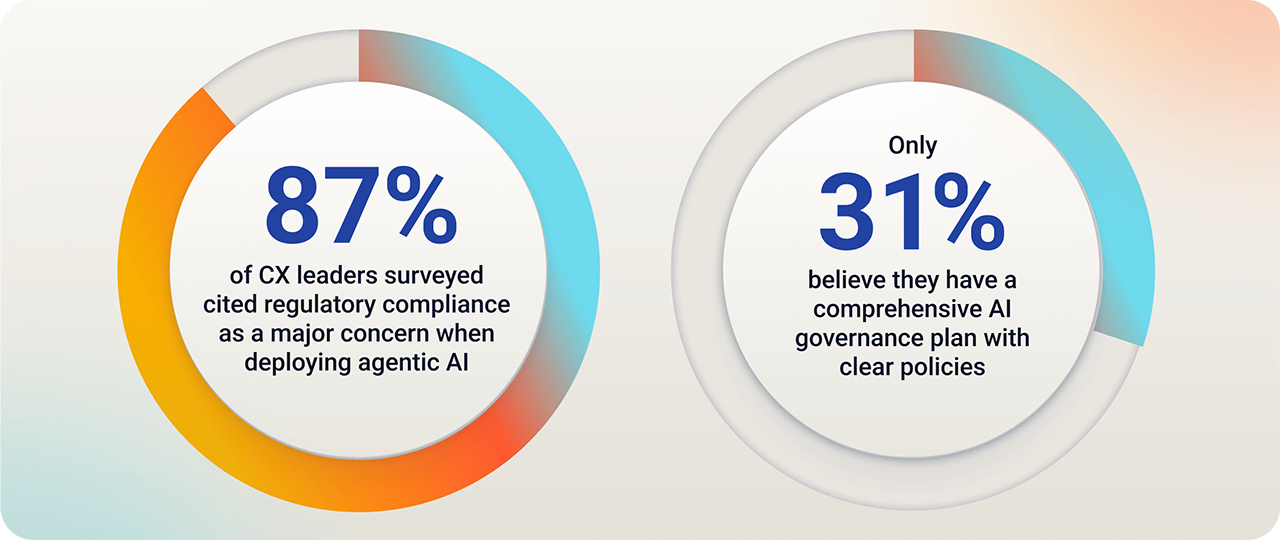

According to a new Genesys report, 87% of CX leaders surveyed cited regulatory compliance as a major concern when deploying agentic AI. And only 31% believe they have a comprehensive AI governance plan with clear policies. Even more alarming, 28% of those surveyed at organizations with no AI governance policy feel they’re ready to deploy agentic AI.

This disconnect is dangerous. If left unchecked, agentic AI can deviate from policy, generate false information and undermine customer trust in seconds.

Safeguards cannot be retrofitted. To mitigate these risks, governance must evolve from reactive compliance to proactive orchestration.

Innovation is racing ahead, but many organizations are struggling to install the guardrails. This gap between acceleration and control is becoming a strategic liability.

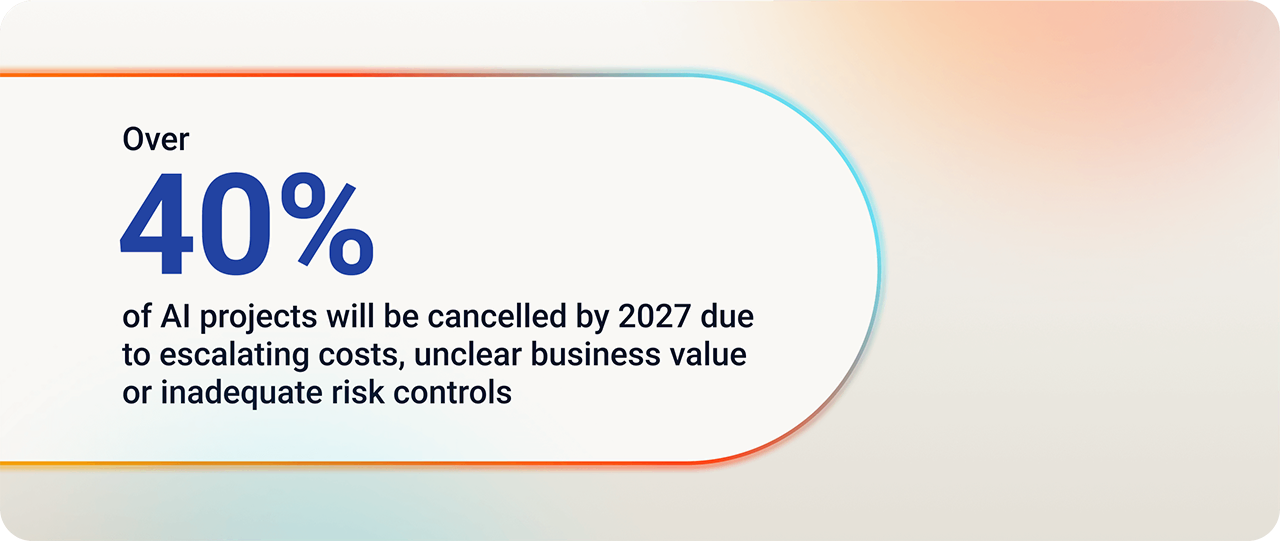

According to Gartner®, “over 40% of agentic AI projects will be canceled by end of 2027 due to escalating costs, unclear business value or inadequate risk controls.”

Let that sink in. More than four in ten agentic AI initiatives may be canceled, and lack of governance will be a strong contributor.

The implications are clear. When AI becomes deeply embedded in customer experiences, it’s not enough to ask what it can do. We also need to ask what it should do, and whether we can trust it to behave in a way that protects customer relationships, brand integrity and regulatory standing.

Failing to put AI governance at the center means exposing your business to operational risk and reputational damage. It also puts real limits on how far and how fast AI can scale.

Old-school automation follows rules. The bot hears a request, runs a script and produces an outcome. It’s predictable, rigid and useful up to a point.

Agentic systems behave differently. They work toward goals, evaluate the environment and adjust their actions to match it. No one has to program every possible path because the AI finds its own route.

This opens up new territory. Virtual agents start to feel less like digital assistants and more like collaborative problem-solvers. They can recognize when a situation needs escalation, adapt conversations at a moment’s notice and ask for clarification instead of making blind assumptions. The software gets smarter but also harder to pin down: the same flexibility that makes agentic AI so powerful also introduces risk.

That’s why foundational governance is imperative. From Day One, organizations must build systems that are explainable, values-aligned and flexible without sacrificing control.

Without embedded guardrails, independent AI can introduce risk faster than an organization can respond. Missteps won’t be theoretical. They’ll be customer-facing.

Companies must define the rules early: what’s in bounds, what isn’t and how semi-autonomous AI systems should respond when uncertainty creeps in. That also means setting up tools to monitor behavior and course-correct in real time.
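To make "defining the rules early" concrete, here is a minimal sketch in Python of what such a boundary check could look like. It is illustrative only and not a Genesys API: the `Policy` fields, the `evaluate` function and the example thresholds are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"        # in bounds: the AI may proceed
    ESCALATE = "escalate"  # uncertain or over a limit: hand off to a human
    BLOCK = "block"        # out of bounds: never permitted


@dataclass(frozen=True)
class Policy:
    # All fields are hypothetical, for illustration only.
    allowed_actions: frozenset  # what is in bounds
    max_refund: float           # hard limit on refund amounts
    min_confidence: float       # below this, the AI must not act alone


def evaluate(policy: Policy, action: str, amount: float, confidence: float) -> Verdict:
    # Out of bounds: the action is not on the allowlist at all.
    if action not in policy.allowed_actions:
        return Verdict.BLOCK
    # Uncertainty or an over-limit amount: route to a human instead of guessing.
    if confidence < policy.min_confidence or amount > policy.max_refund:
        return Verdict.ESCALATE
    return Verdict.ALLOW


policy = Policy(
    allowed_actions=frozenset({"issue_refund", "update_address"}),
    max_refund=50.0,
    min_confidence=0.8,
)
```

With this policy, a confident 20.00 refund is allowed, a 200.00 refund is escalated to a person, and an action that was never listed, such as closing an account, is blocked outright.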

When an AI makes choices instead of following scripts, governance plays a different role. It keeps the AI constrained and teaches it what matters, so that its judgment aligns with company values and customer expectations.

We design AI at Genesys with accountability at its core. Our commitment to AI transparency and governance is a foundational principle. Every product we build reflects that promise. We embed guardrails, clarity and control directly into the architecture, so businesses don’t have to bolt on compliance later.

By prioritizing privacy, fairness and security from the start, we make it easier for organizations to use AI confidently and responsibly. Our goal is to deliver AI that earns trust, enhances customer experiences and stays aligned with the values that matter most.

This trust layer keeps your AI grounded. It tracks behavior in real time, flags when things go wrong and keeps a detailed log of every move for audits or deeper analysis.

Supervisors have live oversight with the power to intervene. They can pause a decision, override a response or steer the system when human judgment takes priority.
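The oversight pattern described above (log every move, let a supervisor pause or override a decision) can be sketched in a few lines of Python. This is an assumption-laden illustration, not how any Genesys product is implemented: `TrustLayer`, `Decision` and their methods are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    action: str
    status: str = "pending"  # pending, paused, overridden or executed


@dataclass
class TrustLayer:
    # Hypothetical audit trail: one entry per event, kept for later review.
    audit_log: list = field(default_factory=list)

    def _record(self, event: str, action: str, actor: str) -> None:
        self.audit_log.append({"event": event, "action": action, "actor": actor})

    def propose(self, action: str) -> Decision:
        decision = Decision(action)
        self._record("proposed", action, "ai")
        return decision

    def pause(self, decision: Decision, supervisor: str) -> None:
        decision.status = "paused"
        self._record("paused", decision.action, supervisor)

    def override(self, decision: Decision, new_action: str, supervisor: str) -> None:
        decision.action = new_action
        decision.status = "overridden"
        self._record("overridden", new_action, supervisor)

    def execute(self, decision: Decision) -> bool:
        # A paused decision is held until a human releases or replaces it.
        if decision.status == "paused":
            return False
        decision.status = "executed"
        self._record("executed", decision.action, "ai")
        return True
```

In this sketch a supervisor who pauses a proposed refund stops it from executing, and an override replaces the action before it runs. Every step, whether taken by the AI or by a person, lands in the same log.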

When trust, fairness and privacy are foundational, learning happens under a watchful eye. Feedback loops reinforce good outcomes and filter out the noise. AI systems know when to hold back, especially when regulated data appears or escalation protocols apply. The result is an AI that stays within the lines even when the terrain shifts.

This isn’t traditional AI governance. It’s what we call an “agentic constitution” that can encode your values, policies and goals directly into every decision the AI makes.

This is the rationale behind Genesys Cloud™ AI Studio and its flagship capability, Genesys Cloud™ AI Guides. Together, they empower you to build smarter, responsible AI experiences right now with your brand values firmly in control.

AI Guides enables organizations to create agentic experiences that are goal-oriented, dynamic and accountable. Business users can describe what the AI should achieve, and AI Guides translates that vision into defined, traceable behaviors within your ethical boundaries.

Two Genesys Cloud AI Studio capabilities, AI Guides and Custom Conversation Summaries, are now available.

At Genesys, we map AI maturity across six Levels of Experience Orchestration. Level 1 starts with static automation: rigid scripts, fixed flows and manual interventions. Level 2 adds responsiveness, with systems that trigger reactions but still follow rules without context. We believe most organizations are here.

Level 3 brings in prediction. Now AI can anticipate, but it still leans on humans to connect the dots.

AI Guides moves you into Level 4: semi-autonomous intelligence. Here, AI takes initiative. It respects your boundaries and learns from outcomes to improve without constant retraining. Teams shift from managing tasks to steering intelligent systems that actually help carry the load.

With disciplined design, AI Guides sets the stage for universal agentic orchestration (Level 5). At this level, multiple AI agents work together, sharing context, rebalancing priorities and refining strategies independently. The system learns as a whole, continuously and cooperatively.

Genesys gives you the tools to climb each level of AI maturity deliberately. While others rush to keep up, you can focus on creating what works. The pieces are here. The direction is clear. What you do next is what sets you apart. Let’s lead responsibly and build boldly.

Learn more about the five core principles that inform how we build, evolve and deploy AI systems to support ethical, safe and human-centered outcomes.

Gartner Press Release, Gartner Predicts Over 40% of Agentic AI Projects Will Be Canceled by End of 2027, June 25, 2025

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.
